Context engineering is just software engineering for LLMs

Tony Holdstock-Brown · 8/21/2025 · 4 min read

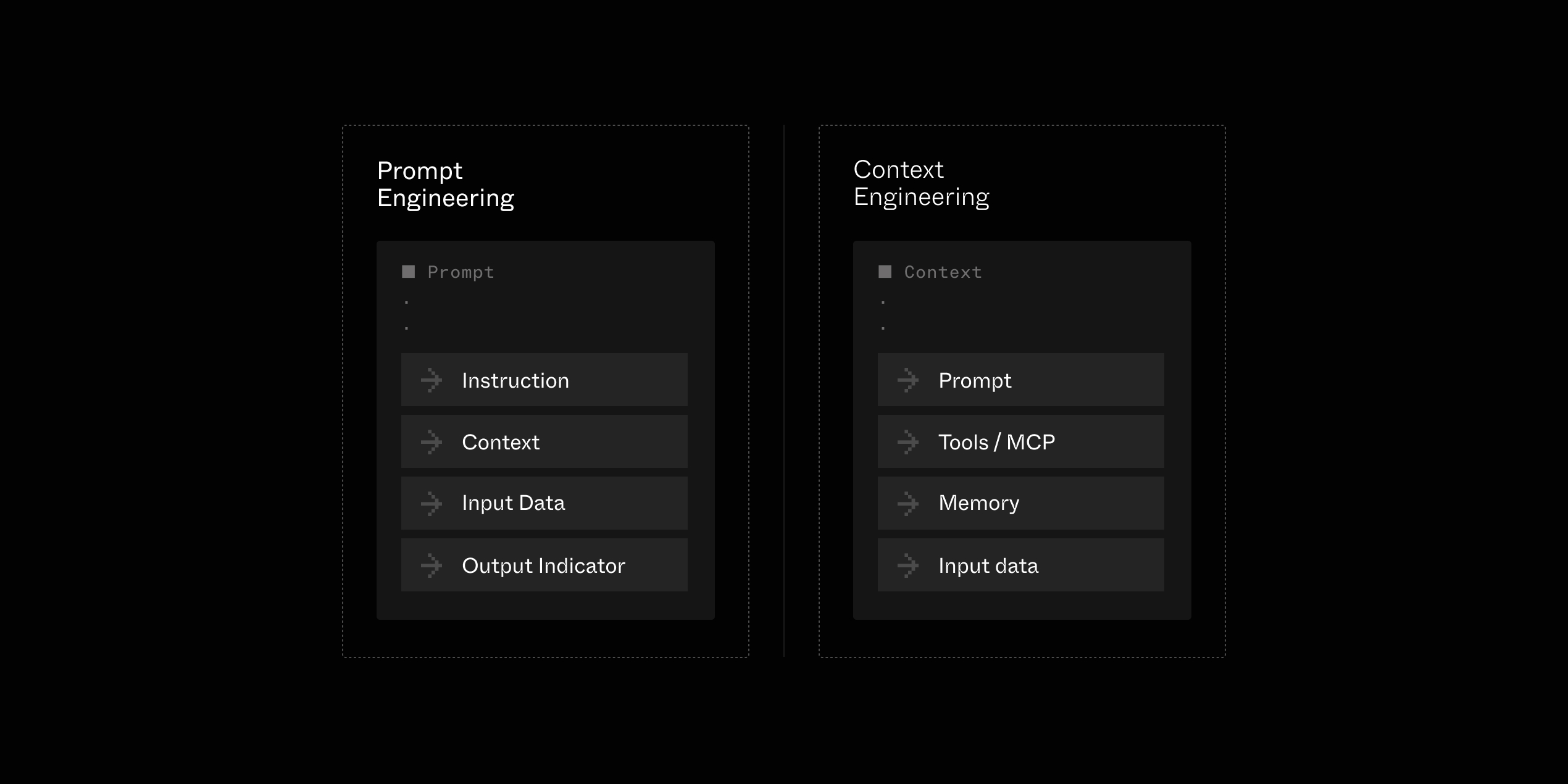

Over the past year, no new concept, from "Flow Engineering" to "Agentic", has been powerful enough to overshadow Prompt Engineering. Why has Prompt Engineering held on so strongly? Was it a matter of wording, since "Agentic" makes it hard to separate the term's technical definition from its use cases, or of timing, since few teams had AI Agents in production six months ago? It is impossible to say.

With the widespread adoption of MCP, reasoning models, and agentic products like Cursor, "Context Engineering" might be the most important concept to emerge in AI in the last few years, providing an excellent definition of what building agentic systems involves.

Your LLM app doesn't need better prompts; it needs better context

Context Engineering is not arriving because it is trendy, but because it solves the right problem. Prompt Engineering's best-known technique, Chain-of-Thought (CoT) prompting, initially served as a remedy for hallucinations, but it is now built into models as reasoning.

Building agentic systems is no longer about giving the LLM the right prompt. It is about providing it with a suitable set of tools, memory, and data so it can build its own context, and about managing how that context evolves over time.

As Philipp Schmid put it, how well AI Agents perform depends on the quality of their context:

"With the rise of Agents it becomes more important what information we load into the 'limited working memory'. We are seeing that the main thing that determines whether an Agent succeeds or fails is the quality of the context you give it. Most agent failures are not model failures anymore, they are context failures."

Good context depends on several factors:

- Providing the right tools for the task: How many tools? How generic or specific should each tool be? Does the tool definition help the LLM select it?

- Maintaining state and memory across the agent loop: How can the LLM access its memory? Which parts of the state should be pruned, and when?

- Gathering use-case-specific data via integrations: The more precise the context, the better. Can a personal assistant agent help you without access to your emails and calendar?

- Structuring, augmenting, and formatting the retrieved data so it is easy to ingest: The data passed to LLMs should be easy to interpret (for example, kept concise, and formatted as YAML rather than JSON).
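The memory question in the list above (which state to prune, and when) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a recommended policy: the `Message` shape, `estimate_tokens`, and `prune_history` are hypothetical names, tokens are approximated by word count instead of a real tokenizer, and the policy simply keeps system messages plus the most recent turns that fit the budget.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str      # "system", "user", "assistant", or "tool" (hypothetical shape)
    content: str


def estimate_tokens(text: str) -> int:
    # Rough stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def prune_history(messages: list[Message], budget: int) -> list[Message]:
    """Keep all system messages, then as many of the newest turns as fit in `budget`."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    # System messages are always kept, even if they alone exceed the budget.
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: list[Message] = []
    # Walk backwards so the most recent turns are kept first.
    for msg in reversed(rest):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break  # stop at the first turn that does not fit, so kept turns stay contiguous
        kept.append(msg)
        used += cost
    # Restore chronological order for the kept turns.
    return system + list(reversed(kept))


history: list[Message] = [
    {"role": "system", "content": "You are an email assistant."},
    {"role": "user", "content": "Summarize my unread emails from last week please"},
    {"role": "assistant", "content": "You have three unread emails about the Q3 budget."},
    {"role": "user", "content": "Draft a reply to the first one"},
]
pruned = prune_history(history, budget=20)
```

Dropping the oldest turns is the simplest possible policy; many systems instead summarize what they drop, or move it into long-term memory, so the agent does not lose facts established early in the conversation.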

Context Engineering is an attempt to standardize the architectural best practices for building agentic systems. Unlike Prompt Engineering, it is not a set of techniques but a set of architectural principles, many of which echo Software Engineering principles. This reflects the growing maturity of AI applications and their need for production-grade architecture.

Context engineering is all about orchestration.

Context Engineering is defined as:

"building dynamic systems to provide the right information and tools in the right format such that the LLM can plausibly accomplish the task."

The challenges of Context Engineering are similar to those of Software Engineering.

As most of Context Engineering's layers become commoditized (multiple memory providers are available, from Pinecone to MongoDB, and MCP has standardized tool design), the focus shifts from selecting the right tool for each layer to integrating the layers effectively.

Zooming out, Context Engineering primarily focuses on building workflows around your Agents that form an effective context pipeline: gathering and classifying data, retrieving short- and long-term memory, and passing the result to the LLM along with the associated tools.
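A toy version of that pipeline, written in Python, makes the stages explicit. Everything here is an illustrative assumption rather than a prescribed design: `Tool`, `is_relevant`, `to_yaml_like`, and `build_context` are hypothetical names, `is_relevant` is a keyword-overlap stand-in for a real classifier or embedding search, `to_yaml_like` only handles flat records, and the section layout of the final string is arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    description: str


def words(text: str) -> set[str]:
    return set(text.lower().split())


def is_relevant(text: str, task: str) -> bool:
    # Hypothetical classifier: relevant if the text shares at least one word with the task.
    # A production pipeline would more likely use embeddings or an LLM call.
    return bool(words(text) & words(task))


def to_yaml_like(record: dict) -> str:
    # Flat "key: value" lines: a compact, readable alternative to JSON for flat records.
    return "\n".join(f"{key}: {value}" for key, value in record.items())


def build_context(task: str, records: list[dict], short_term: list[str],
                  long_term: list[str], tools: list[Tool]) -> str:
    # 1. Gather and classify: keep only records whose text looks relevant to the task.
    relevant = [r for r in records if is_relevant(r.get("text", ""), task)]
    # 2. Memory: the last few short-term entries plus relevant long-term entries.
    memories = short_term[-3:] + [m for m in long_term if is_relevant(m, task)]
    # 3. Assemble task, data, memory, and tools into one prompt-ready string.
    parts = [f"## Task\n{task}"]
    parts += [f"## Record\n{to_yaml_like(r)}" for r in relevant]
    if memories:
        parts.append("## Memory\n" + "\n".join(f"- {m}" for m in memories))
    parts.append("## Tools\n" + "\n".join(f"- {t.name}: {t.description}" for t in tools))
    return "\n\n".join(parts)


context = build_context(
    task="reply to the budget email",
    records=[
        {"from": "alice", "text": "Q3 budget review moved to Friday"},
        {"from": "bob", "text": "Lunch on Thursday?"},
    ],
    short_term=["User asked for a summary of unread emails"],
    long_term=["Alice prefers short replies", "Budget approvals go through finance"],
    tools=[Tool("send_email", "Send an email reply")],
)
```

The heuristics are deliberately naive; the point is the shape. Each stage (classification, memory retrieval, formatting, tool listing) is a separate function that could be swapped for a production component without touching the others.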

Unlike MCP, which is a protocol that comes with an implementation, Context Engineering's principles will give rise to multiple flavors, as in Software Engineering. While some companies advocate opinionated, rigorous frameworks, like the Zend Framework in the early days of PHP, we firmly believe that a flexible approach to Context Engineering is the quickest path to putting AI Agents into users' hands.

The keystone of Context Engineering is a flexible and robust orchestration layer that connects LLM apps to various data sources, such as third-party APIs and products, to provide the richest possible context to your Agents. For example, answering your emails fully autonomously requires access to your calendar, your emails, and tools to perform actions.
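One property a robust orchestration layer needs is that a single slow or failing integration should not take the whole agent down. The Python sketch below illustrates only that concern, using the standard `asyncio` library; it is not Inngest's API. The three `fetch_*` functions and `gather_context` are hypothetical stand-ins for real integrations (the CRM one fails on purpose), each source gets its own timeout, and a failed source contributes an empty list.

```python
import asyncio
from typing import Awaitable, Callable


# Hypothetical integrations standing in for real email, calendar, and CRM APIs.
async def fetch_emails() -> list[str]:
    await asyncio.sleep(0.01)  # simulate network latency
    return ["Alice: can we move the budget review?"]


async def fetch_calendar() -> list[str]:
    await asyncio.sleep(0.01)
    return ["Fri 10:00 Budget review"]


async def fetch_crm() -> list[str]:
    raise ConnectionError("CRM unavailable")  # simulate an outage


async def gather_context(
    sources: dict[str, Callable[[], Awaitable[list[str]]]],
    timeout: float = 2.0,
) -> dict[str, list[str]]:
    """Fetch every source concurrently; a failing or slow source yields [] instead of raising."""

    async def safe(fetch: Callable[[], Awaitable[list[str]]]) -> list[str]:
        try:
            # Per-source timeout, so one slow integration cannot stall the others.
            return await asyncio.wait_for(fetch(), timeout)
        except Exception:
            return []  # degrade gracefully: the agent still gets the other sources

    results = await asyncio.gather(*(safe(f) for f in sources.values()))
    return dict(zip(sources.keys(), results))


context = asyncio.run(gather_context({
    "emails": fetch_emails,
    "calendar": fetch_calendar,
    "crm": fetch_crm,
}))
```

A production orchestration layer would also retry failed calls and record which sources were missing so the agent can account for the gap; this sketch silently degrades to keep it short.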

We believe that future Agents will be deeply integrated with their users' environment, providing quick and secure access to relevant context when necessary.

Don't take our word for it; look at what our customers have built with Inngest:

- See how Day AI built a CRM that can reason, providing tailored Assistants powered by the most advanced Context Engineering architecture.

- Windmill built an AI Manager Agent that integrates with companies' tool suite and interacts with employees via Slack to automate reporting.